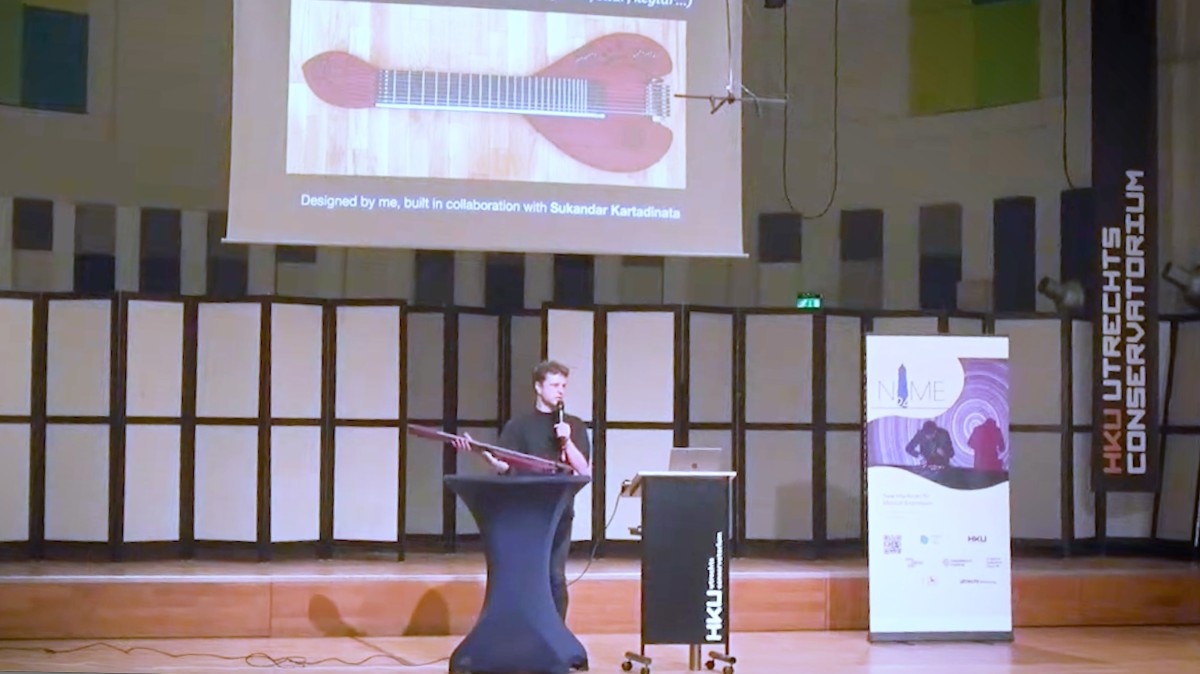

Next week is NIME week! This year I am not going – Canberra is way too far from Berlin – but I finally got around to uploading my presentation from last year’s conference, which took place in Utrecht, the Netherlands. It features the Sophtar and a somewhat pretentious digression about magic, ergodynamics, and a quote from a book by Benjamin Labatut that I was reading on my way there. Here is the paper!

Author: _FV

-

TCP/Indeterminate Place quartet performs Reconciliation at Studio Acusticum

On Monday 9 June 2025 the TCP/Indeterminate Place quartet performed Reconciliation, a piece we composed in 2024 for a special double-duo telematic performance in which two of us performed at the Kapelle der Versöhnung in Berlin and the other two at the Orgelpark in Amsterdam. This time we were all in the Studio Acusticum main concert hall, playing our instruments and interacting with the massive Acusticum/University pipe organ in several ways. I experimented with the pressure aftertouch of the Sophtar as a way of adding and subtracting stops to and from the registration of manual IV of the organ. Here is a short snippet I recorded with my phone during the sound check.
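One way to picture an aftertouch-to-registration mapping like the one described above is as a threshold function: the harder the press, the more stops are drawn, in a fixed order. The sketch below illustrates that idea under assumptions only. The stop names, the linear mapping, and the 0 to 127 range (standard 7-bit MIDI channel pressure) are hypothetical choices for illustration, not the actual setup used at Studio Acusticum.

```python
from typing import List, Sequence, Tuple

# Hypothetical registration order for one manual (placeholder stop names).
STOP_ORDER = ["Gedackt 8'", "Principal 8'", "Octave 4'", "Mixture IV", "Trumpet 8'"]


def stops_for_pressure(pressure: int, stops: Sequence[str] = STOP_ORDER) -> List[str]:
    """Map a 7-bit aftertouch value (0-127) to the first n stops in `stops`.

    Assumption: a linear mapping, so no pressure draws no stops and full
    pressure draws all of them.
    """
    pressure = max(0, min(127, pressure))  # clamp out-of-range values
    n = round(pressure / 127 * len(stops))
    return list(stops[:n])


def registration_changes(prev: Sequence[str], new: Sequence[str]) -> Tuple[List[str], List[str]]:
    """Return (stops to add, stops to remove) to move from `prev` to `new`."""
    to_add = [s for s in new if s not in prev]
    to_remove = [s for s in prev if s not in new]
    return to_add, to_remove


if __name__ == "__main__":
    current: List[str] = []
    for p in [0, 40, 90, 127, 30]:  # a made-up sequence of pressure readings
        target = stops_for_pressure(p)
        add, remove = registration_changes(current, target)
        print(p, "add:", add, "remove:", remove)
        current = target
```

Sending the resulting add/remove lists to an organ as stop-on/stop-off messages is left out, since that interface depends entirely on the instrument's control system.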

TCP/Indeterminate Place is:

Mattias Petersson: parsimonia, live coding, hyperorgan

Stefan Östersjö: midi electric guitar, hyperorgan

Robert Ek: augmented clarinet, hyperorgan

Federico Visi: Sophtar, hyperorgan

Our performance was a prelude to Mattias Petersson’s successful PhD defence, titled “The Act of Patching”, which took place the following day.

-

Videos from the Embedding Algorithms Workshop

I have made a YouTube playlist with videos from the Embedding Algorithms Workshop.

The event took place in July 2024 at Universität der Künste Berlin, where we brought together a community of artists, researchers, and practitioners and had a great time discussing the practice of embedding algorithms in the arts through presentations, exhibitions, and performances. Enjoy!

Full playlist:

-

Sophtar live at the 2025 Guthman Musical Instrument Competition

Video of the performance at the 2025 Guthman Musical Instrument Competition, Georgia Tech Ferst Center For The Arts, 8 March 2025.

Jeff Albert: trombone;

Sukandar Kartadinata & Federico Visi: Sophtar

-

The Sophtar at the Guthman Musical Instrument Competition

The Sophtar has been selected as one of the ten finalists in this year’s Guthman Musical Instrument Competition.

Together with Sukandar Kartadinata, we will bring the instrument to the Georgia Tech campus in Atlanta (US) to present it to the competition’s judges and participate in a public performance involving local Atlanta musicians.

I designed the Sophtar and built it in collaboration with Kartadinata. At the Intelligent Instruments Lab, the Sophtar was extended with actuators, on-board DSP, and machine learning models to make it respond to the player in ways that are not easy to predict yet meaningful and inspiring.

Read more about the Sophtar here and check out all the finalists in this year’s competition here.

The Sophtar has been developed with the support of the GEMM))) research cluster at Luleå University of Technology, the Intelligent Instruments Lab at the University of Iceland, the European Research Council, the Swedish Research Council, the Boström Fund, and the Helge Ax:son Johnson Foundation.

-

Wilding AI Lab, CTM 2025

Next week I will take part in the Wilding AI Lab:

this four-day lab will assemble a group of participants selected via open call to learn about the application of generative AI in spatial audio, and collectively explore the wilder territories of AI. Shaped as a mix of theoretical and hands-on components, the lab runs 23 – 25 January, and culminates with a public presentation session Sunday 26 January.

The lab will take place at MONOM, a unique performance venue and spatial audio studio housed at Funkhaus Berlin. It consists of a 3D array of omnidirectional speakers arranged on columns, with subwoofers placed underneath the audience, beneath an acoustically transparent floor.

MONOM (Ph: Becca Crawford, from 4dsound.net.)

I am pretty excited about experimenting with the Sophtar there, particularly since each of its eight strings and each of the audio synthesis machine learning models can be routed to a separate output channel, making it possible to place each sound source at a different point in space.

The Sophtar (Ph: Federico Visi).

I look forward to meeting the other participants in person. Engaging with a community of practitioners, sharing skills and ideas, and taking part in an open critical discussion on AI- and data-driven tools is crucial for addressing the rapid changes affecting our cultural landscape. I believe it is also very important to open the process to the public in order to demystify the black boxes, promote critical thinking, spread knowledge, and offer new narratives, so I am excited at the prospect of an open lab on the fourth day. In Maurice Jones’ words during our first collective Zoom meeting, “the design of how we gather is essential.”

Participants

- Daniel Limaverde

- Evangeline Y Brooks

- Federico Visi

- Gadi Sassoon

- Hyeji Nam

- Irini Kalaitzidi

- Nico Daleman

- Ninon and Jun Suzuki

- SENAIDA

- Three Amps

- Transient Cat

- TWEE

Hosts

-

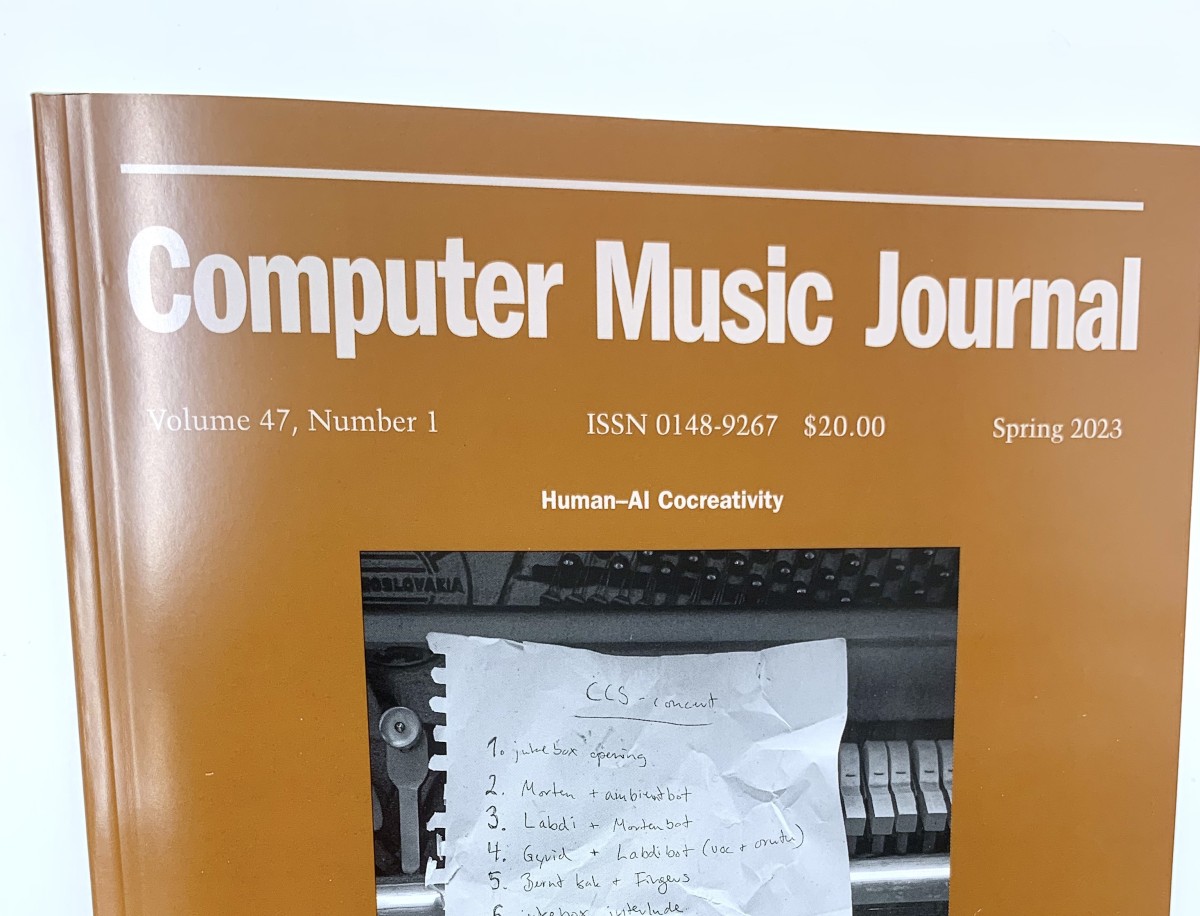

Computer Music Journal article: “An End-to-End Musical Instrument System That Translates Electromyogram Biosignals to Synthesized Sound”

I finally got my hands on the physical copy of the Computer Music Journal issue on Human-AI Cocreativity that includes our open-access article An End-to-End Musical Instrument System That Translates Electromyogram Biosignals to Synthesized Sound.

There we report on our work on an instrument that translates the electrical activity produced by muscles into synthesised sound. The article also describes our collaboration with the Chicks on Speed on the performance piece Noise Bodies (2019), which was part of the Up to and Including Limits: After Carolee Schneemann exhibition at Muzeum Susch.

I co-authored the article with Atau Tanaka, Balandino Di Donato, Martin Klang, and Michael Zbyszyński. Here is the abstract:

This article presents a custom system combining hardware and software that senses physiological signals of the performer’s body resulting from muscle contraction and translates them to computer-synthesized sound. Our goal was to build upon the history of research in the field to develop a complete, integrated system that could be used by nonspecialist musicians. We describe the Embodied AudioVisual Interaction Electromyogram, an end-to-end system spanning wearable sensing on the musician’s body, custom microcontroller-based biosignal acquisition hardware, machine learning–based gesture-to-sound mapping middleware, and software-based granular synthesis sound output. A novel hardware design digitizes the electromyogram signals from the muscle with minimal analog preprocessing and treats it in an audio signal-processing chain as a class-compliant audio and wireless MIDI interface. The mapping layer implements an interactive machine learning workflow in a reinforcement learning configuration and can map gesture features to auditory metadata in a multidimensional information space. The system adapts existing machine learning and synthesis modules to work with the hardware, resulting in an integrated, end-to-end system. We explore its potential as a digital musical instrument through a series of public presentations and concert performances by a range of musical practitioners.
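The abstract mentions mapping gesture features to sound. A widely used feature for muscle activity is a windowed RMS amplitude envelope of the digitised EMG signal. The sketch below is a generic illustration of that feature under stated assumptions, not code from the system described in the article; the window and hop sizes are arbitrary placeholders.

```python
import math
from typing import List, Sequence


def rms_envelope(signal: Sequence[float], window: int = 64, hop: int = 32) -> List[float]:
    """Windowed RMS amplitude of an already-digitised EMG signal.

    Assumptions: `signal` is a plain list of samples, and only full windows
    are analysed (a trailing partial window is dropped).
    """
    if window <= 0 or hop <= 0:
        raise ValueError("window and hop must be positive")
    envelope = []
    for start in range(0, len(signal) - window + 1, hop):
        frame = signal[start:start + window]
        envelope.append(math.sqrt(sum(x * x for x in frame) / window))
    return envelope


if __name__ == "__main__":
    # A made-up burst: quiet, then a louder "contraction", then quiet again.
    burst = [0.01] * 128 + [0.8, -0.8] * 64 + [0.01] * 128
    print([round(v, 3) for v in rms_envelope(burst)])
```

A feature stream like this could then be fed to a trained model that outputs synthesis parameters; which features and model the actual system uses is described in the article itself.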

-

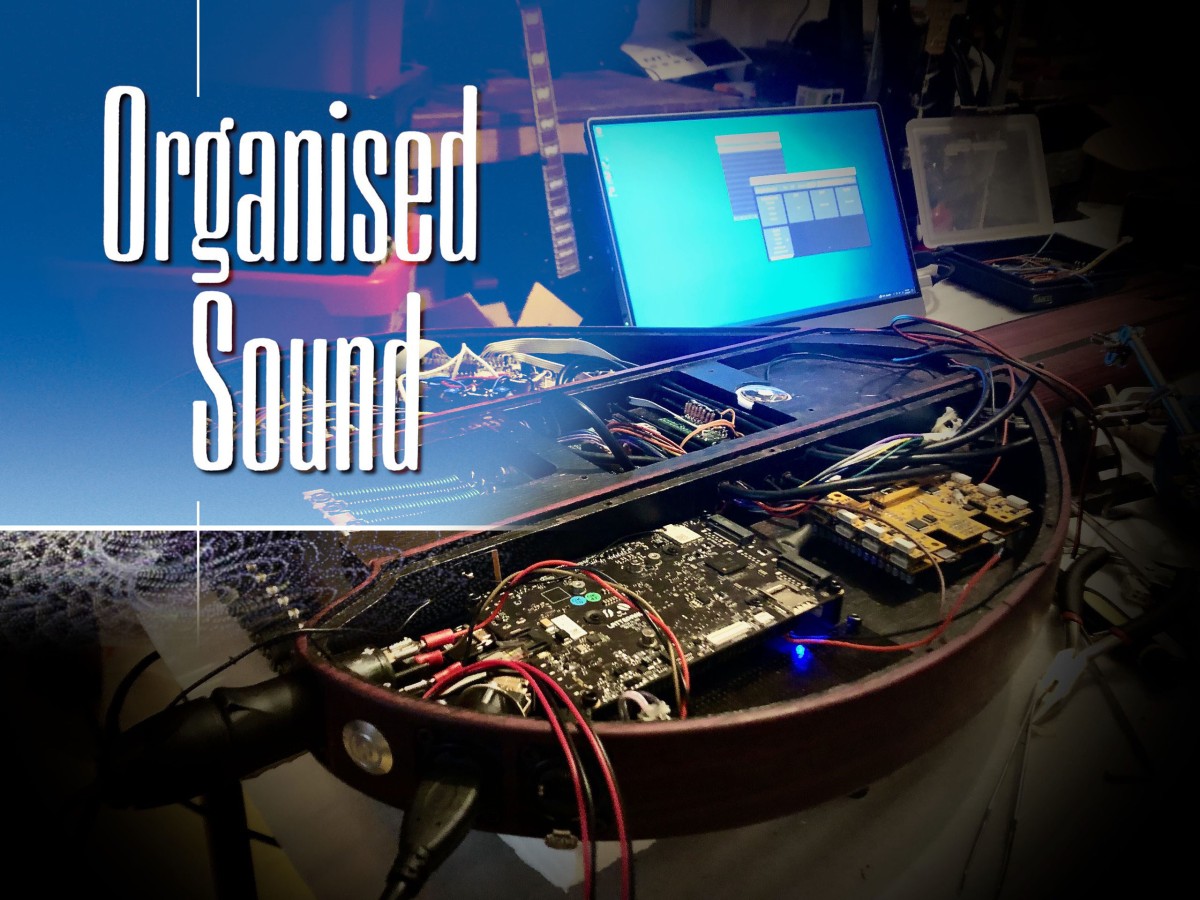

Organised Sound – Call for papers: Embedding Algorithms in Music and Sound Art

Excited to announce that Thor Magnusson and I are co-editing a thematic issue of Organised Sound on the topic of Embedding Algorithms in Music and Sound Art.

Please find the full call below and on the journal’s website.

The idea for an edited journal issue came after the Embedding Algorithms Workshop that took place at the Berlin Open Lab in July 2024.

Feel free to share this call with others who might be interested in contributing.

We look forward to receiving your submissions!

Call for Submissions – Volume 31, Number 2

Issue thematic title: Embedding Algorithms in Music and Sound Art

Date of Publication: August 2026

Publishers: Cambridge University Press

Issue co-ordinators: Federico Visi (mail@federicovisi.com), Thor Magnusson (thormagnusson@hi.is)

Deadline for submission: 15 September 2025

Embedding Algorithms in Music and Sound Art

Embedding algorithms into physical objects has long been part of sound art and electroacoustic music practice, with sound artists and researchers creating tools and instruments that incorporate some algorithmic process that is central to their function or behaviour. Such practice has evolved profoundly over the years, touching many aspects of sound generation, electroacoustic composition, and music performance.

Whilst closely linked to technology, the practice of embedding algorithms into tools for sound making also transcends it. Different forms and concepts emerge in domains that can be analogue, digital, pertaining to recent or ancient technologies, tailored around the practice of a single individual or encompassing the behaviours of multiple players, even non-human ones.

The processes and rules that can be inscribed into instruments may include assumptions about sound and music, influencing how these are understood, conceptualised, and created. Algorithms can encode complex behaviours that may make the instrument adapt to the way it is being played or give it a degree of autonomy and agency. These aspects can have profound effects on the aesthetics and creative processes of sound art and electroacoustic music, as artists are given the possibility of delegating some of the decisions to their tools.

In the practice of embedding algorithms, knowledge and technology from other disciplines are appropriated and repurposed by sound artists in many ways. Sensors and algorithms developed in other research fields are used for making instruments. Similarly, digital data describing various phenomena – from environmental processes to the global economy – have been harnessed by practitioners for defining the behaviour of their sound tools, resulting in data-driven sound practices such as sonification.

More recently, advances in artificial intelligence have made it possible to embed machine learning models into instruments, introducing new aesthetics and practices in which curating a dataset and training a model are part of the artistic process. These trends in music and sound art have sparked a broad discourse addressing notions of agency, autonomy, authenticity, authorship, creativity and originality. There are aesthetic, epistemological, and ethical implications arising from the practice of building sound-making instruments that incorporate algorithmic processes. How do intelligent instruments affect our musical practice?

This demands an interdisciplinary critical enquiry. For this special issue of Organised Sound we seek articles that address the aesthetic and cultural implications that designing and embedding algorithms has on electroacoustic music practice and sound art. We are interested in the role of this new technological context on musical practice.

Please note that we do not seek submissions that describe a project or a composition without a broad contextualisation and an underlying central question. We instead welcome submissions that aim at addressing one or more specific issues of relevance to the call and to the journal’s readership which may include works or projects as examples.

Topics of interest include:

- Aesthetics in performance of instruments with embedded algorithms

- Phenomenology of electroacoustic performance with algorithmic instruments

- Agency, autonomy, intentionality, and “otherness” of algorithmic instruments

- Co-creation and authorship in creative processes involving humans and algorithmic instruments

- Dynamics of feedback, adaptivity, resistance, and entanglement in player-instrument-algorithm assemblages

- Human-machine electroacoustic improvisation

- Critical reflections on the role of algorithms in the creation of sound tools and instruments and their impact on electroacoustic music practice

- Meaning making, symbolic representations, and assumptions on music and sound embedded within instruments and sound tools

- Knowledge through instruments: epistemic and hermeneutic relations in algorithmic instruments

- Appropriation, repurposing, and “de-scripting” of hardware, algorithms, and data by sound artists

- Exploration of individual and collective memory through algorithmic instrumental sound practice

- Roles and understandings of the machine learning model as a tool in instrumental music practice

- Use of algorithmic instruments in contexts informed by historical and/or non-Western traditions

- Ethics, sociopolitics, and ideology in the design of algorithmic tools for electroacoustic music

- Legibility and negotiability of algorithmic processes in electroacoustic music practice

- Non-digital approaches: embedding algorithms in non-digital instruments

- Postdigital and speculative approaches in electroacoustic music practice with algorithmic instruments

- Data as instrument in electroacoustic music practice: curating and embedding data into instruments

- Aesthetics and idiosyncrasies of networked, site-specific, and distributed instruments and performance environments

- Entanglement, inter-subjectivity, and relational posthumanist paradigms in designing and composing with algorithmic instruments

- More-than-human, inter-species, and ecological approaches in algorithmic instrumental practice

Furthermore, as always, submissions unrelated to the theme but relevant to the journal’s areas of focus are welcome.

SUBMISSION DEADLINE: 15 September 2025

SUBMISSION FORMAT:

Notes for Contributors, including how to submit on Scholar One, and further details can be obtained from the inside back cover of published issues of Organised Sound or at the following URL: https://www.cambridge.org/core/journals/organised-sound/information/author-instructions/preparing-your-materials

General queries should be sent to os@dmu.ac.uk, not to the guest editors.

Accepted articles will be published online via FirstView after copy editing prior to the full issue’s publication.

Editor: Leigh Landy; Associate Editor: James Andean

Founding Editors: Ross Kirk, Tony Myatt and Richard Orton†

Regional Editors: Liu Yen-Ling (Annie), Dugal McKinnon, Raúl Minsburg, Jøran Rudi, Margaret Schedel, Barry Truax

International Editorial Board: Miriam Akkermann, Marc Battier, Manuella Blackburn, Brian Bridges, Alessandro Cipriani, Ricardo Dal Farra, Simon Emmerson, Kenneth Fields, Rajmil Fischman, Kerry Hagan, Eduardo Miranda, Garth Paine, Mary Simoni, Martin Supper, Daniel Teruggi, Ian Whalley, David Worrall, Lonce Wyse

-

The Sophtar

The Sophtar is a tabletop string instrument with an embedded system for digital signal processing, networking, and machine learning. It features a pressure-sensitive fretted neck, two sound boxes, and controlled feedback capabilities by means of bespoke interface elements. The design of the instrument is informed by my practice with hyperorgan interaction in networked music performance.

I built the Sophtar in collaboration with Sukandar Kartadinata. I presented and performed with it at NIME 2024, here is the paper from the conference proceedings.

At IIL I am working on extending the Sophtar with actuators and machine learning models to make it respond to my playing in ways that are not easy to predict yet meaningful and inspiring. In particular, I am working on:

- an extension that allows the instrument to self-play by means of solenoids,

- embedding Notochord models,

- per-string filtering for harmonic feedback.
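The per-string filtering item can be pictured as one resonant band-pass filter per string, tuned to a harmonic of that string's open pitch, so that feedback is more likely to build up at that harmonic. This is a generic sketch under stated assumptions, not the Sophtar's on-board DSP: the filter is the standard band-pass from the RBJ Audio EQ Cookbook, and the string tunings, harmonic number, Q, and sample rate are hypothetical values chosen for illustration.

```python
import math
from typing import List, Sequence, Tuple

FS = 48000.0  # hypothetical sample rate in Hz

# Hypothetical open-string fundamentals (Hz) for eight strings.
OPEN_STRINGS = [82.41, 110.0, 146.83, 196.0, 246.94, 329.63, 392.0, 440.0]


def bandpass_coeffs(f0: float, q: float, fs: float) -> Tuple[tuple, tuple]:
    """RBJ cookbook band-pass (constant 0 dB peak gain), normalised by a0."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (1.0, -2 * math.cos(w0) / a0, (1 - alpha) / a0)
    return b, a


class Biquad:
    """Direct Form I biquad processing one sample at a time."""

    def __init__(self, b: tuple, a: tuple) -> None:
        self.b, self.a = b, a
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0

    def process(self, x: float) -> float:
        b0, b1, b2 = self.b
        _, a1, a2 = self.a
        y = b0 * x + b1 * self.x1 + b2 * self.x2 - a1 * self.y1 - a2 * self.y2
        self.x2, self.x1 = self.x1, x
        self.y2, self.y1 = self.y1, y
        return y


def make_string_filters(fundamentals: Sequence[float], harmonic: int = 2,
                        q: float = 30.0, fs: float = FS) -> List[Biquad]:
    """One band-pass per string, centred on the chosen harmonic of its open pitch."""
    return [Biquad(*bandpass_coeffs(f * harmonic, q, fs)) for f in fundamentals]


def filter_signal(filt: Biquad, samples: Sequence[float]) -> List[float]:
    """Run a block of samples through one filter."""
    return [filt.process(x) for x in samples]
```

In this arrangement each string's pickup signal would pass through its own filter before being sent back to an actuator, so the per-string choice of harmonic and Q shapes which partials the feedback favours.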

I see the research and development work on the Sophtar as a way to probe and engage with broader research questions on musical improvisation and co-creativity with machines and algorithms.

I will present the Sophtar at an Open Lab on Friday, 27th September, 15:00-17:00.

Playing the Sophtar at LNDW 2024 (Photo: Christian Kielmann).

EDIT: here is a video of the performance at the 2025 Guthman Musical Instrument Competition, Georgia Tech Ferst Center For The Arts, 8 March 2025.

Jeff Albert: trombone;

Sukandar Kartadinata & Federico Visi: Sophtar

The Sophtar has been developed with the support of the GEMM))) research cluster at Luleå University of Technology, the Intelligent Instruments Lab at the University of Iceland, the European Research Council, the Swedish Research Council, the Boström Fund, and the Helge Ax:son Johnson Foundation.

-

Intelligent Instruments Lab, Iceland

My family and I temporarily left Berlin and relocated to Reykjavik, where I am going to join the Intelligent Instruments Lab (IIL) at the University of Iceland. There, I am going to work on extending the capabilities of the Sophtar, the musical instrument I designed to address the needs stemming from my artistic practice involving hyperorgans, networked music performance, and interactive machine learning. I built the Sophtar in collaboration with Sukandar Kartadinata and describe it in more detail in this NIME 2024 paper. I very much look forward to working with Thor Magnusson and the other super talented researchers at IIL, starting in just a few days!